Analyzing Antibody Testing Methodologies

Hypothesis testing for paired data

Introduction

Recently, a client in the medical testing industry asked us to help evaluate their methodologies for a certain antibody test. Two different tests could be performed: a more traditional option and a newer technology. Because the newer technology was significantly cheaper, our goal was to help determine whether the two methodologies could be used interchangeably.

In this post, we walk through our approach to this analysis, including the R code used to reach the final outcome. We highlight R here, but we have used several different programming languages in past case studies (for example, a separate technical writeup, written in Python, that analyzes unexpected NBA outcomes).

Load and inspect the data

After loading the antibody data, we can see that it contains anonymized patients, their measured antibody levels, and which of the two test methodologies was used to take each measurement.

```r
library(tidyverse)

theme_set(theme_bw(base_size = 12))

# Long format: one row per patient-method measurement
antibody_data = read_csv("Antibody Data.csv")

head(antibody_data)
```

We can also see that in most cases both methodologies were performed on each patient. In statistical terms, this is paired data: we have a measurement from each methodology for every patient (or at least for the majority of patients).
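Before running a paired analysis, it helps to count how many patients actually contribute a complete pair. The following is a minimal base-R sketch of that check. It uses a small, made-up long-format table (`toy_long` is hypothetical, not the client's data) and assumes the same `Patient`, `Method`, and `Antibodies` columns as `antibody_data`:

```r
# Hypothetical toy data in long format: one row per (patient, method) measurement.
# Patient "D" was only measured with Method 1, so it has no complete pair.
toy_long = data.frame(
  Patient    = c("A", "A", "B", "B", "C", "C", "D"),
  Method     = c("Method 1", "Method 2", "Method 1", "Method 2",
                 "Method 1", "Method 2", "Method 1"),
  Antibodies = c(1.2, 1.5, 0.8, 0.9, 2.1, 2.0, 1.1)
)

# Count measurements per patient (rows) and method (columns)
counts = table(toy_long$Patient, toy_long$Method)

# A patient forms a complete pair when measured by both methods
complete_pair = rowSums(counts > 0) == 2

sum(complete_pair)            # 3 complete pairs (A, B, C)
names(which(!complete_pair))  # "D" is missing Method 2
```

On the real data, the same check would be `table(antibody_data$Patient, antibody_data$Method)`, assuming the patient ID column is named `Patient`.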

A quick look at the parity plot comparing the two methodologies suggests that Method 2 may report higher antibody values than Method 1. Points above the y = x reference line are patients whose Method 2 result exceeds their Method 1 result. To confirm this, however, we need a hypothesis test that quantifies how likely a separation this large would be to arise by chance, given the sample size.

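```r
# Reshape to wide format: one row per patient, one column per method
paired_data = pivot_wider(antibody_data, names_from = c("Method"), values_from = c("Antibodies"))

# Rename columns (assumes the patient ID comes first, followed by the two methods in order)
colnames(paired_data) = c("Patient", "Method 1", "Method 2")

# Parity plot: points on the diagonal are patients whose two results agree
paired_data |>
  ggplot(aes(x = `Method 1`, y = `Method 2`)) +
  geom_point() +
  geom_abline(intercept = 0, slope = 1) +
  labs(
    title = "Antibody Measurements for Two Different Testing Methodologies"
  )
```

Statistical Testing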

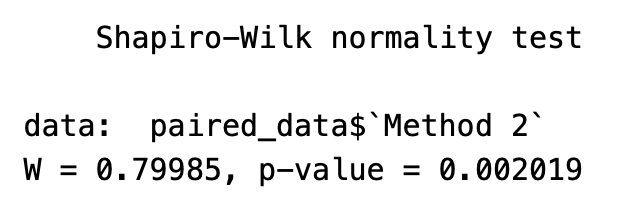

When working with paired data, the natural way to determine whether the testing methodologies agree is a paired t-test. The problem here, however, is that the measurements appear to be strongly skewed, and the sample is also relatively small. Together, these conditions undermine the paired t-test's key assumption. That assumption is that the within-patient differences are approximately normally distributed, or else that the sample is large enough for the central limit theorem to make the mean difference approximately normal despite deviations from normality.
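A paired t-test is equivalent to a one-sample t-test on the within-patient differences. For that reason, its normality assumption concerns those differences rather than each method's raw values. The sketch below illustrates both points on simulated, right-skewed data. The lognormal parameters and the sample size of 15 are arbitrary assumptions, not the client's data.

```r
set.seed(42)

# Simulated (hypothetical) right-skewed paired measurements for 15 patients
n = 15
method_1 = rlnorm(n, meanlog = 0, sdlog = 1)
method_2 = method_1 * rlnorm(n, meanlog = 0.1, sdlog = 0.3)

# Within-patient differences: the quantity a paired analysis actually models
d = method_1 - method_2

# A paired t-test is the same as a one-sample t-test on the differences
paired_test = t.test(method_1, method_2, paired = TRUE)
one_sample_test = t.test(d, mu = 0)
c(paired = unname(paired_test$statistic), one_sample = unname(one_sample_test$statistic))

# So the normality check belongs on the differences, not on each method separately
shapiro.test(d)
```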

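```r
# Distribution of antibody levels for each methodology
antibody_data |>
  ggplot(aes(x = Antibodies)) +
  geom_histogram() +
  facet_wrap(~Method, ncol = 1)

# Shapiro-Wilk normality tests (missing values are dropped automatically)
shapiro.test(paired_data$`Method 1`)
shapiro.test(paired_data$`Method 2`)
```

A better-suited alternative in this case is a non-parametric test such as a permutation test, which does not require the data to follow a normal distribution.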

Just as with a paired t-test, we are interested in the mean of the within-patient differences, which here is the average difference in antibody results between the two methodologies. Our null hypothesis is that there is no difference in antibody results between the two methodologies. If that is true, then computing each patient's difference and averaging those differences should give a value close to 0. If we instead get a value relatively far from 0, we may have enough evidence to reject the null hypothesis and conclude that the methodologies differ.
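In symbols, write $x_{i1}$ and $x_{i2}$ for patient $i$'s Method 1 and Method 2 results, and $n$ for the number of patients with both measurements. This notation is introduced here for illustration. The test statistic is the mean within-patient difference:

$$
d_i = x_{i1} - x_{i2}, \qquad \bar{d} = \frac{1}{n}\sum_{i=1}^{n} d_i
$$

The permutation test uses a slightly stronger null than a zero mean: within each patient the two results are interchangeable, so each $d_i$ is just as likely to have appeared as $-d_i$. A simulated null value, and the two-sided Monte Carlo p-value over $B$ simulations (5,000 here), are then:

$$
\bar{d}^{*} = \frac{1}{n}\sum_{i=1}^{n} s_i d_i, \qquad s_i \in \{-1, +1\} \text{ with equal probability}
$$

$$
\hat{p} = \frac{1}{B}\sum_{b=1}^{B} \mathbf{1}\left\{\left|\bar{d}^{*(b)}\right| \ge \left|\bar{d}\right|\right\}
$$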

To perform the permutation test, we first compute the observed mean difference (Method 1 minus Method 2, using only patients with both measurements), which turns out to be -0.15.

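```r
# Within-patient differences; NA wherever a patient lacks one of the measurements
differences = paired_data$`Method 1` - paired_data$`Method 2`

# Observed mean difference over complete pairs only
avg_difference = mean(differences, na.rm = TRUE)
```

Next, we simulate the null hypothesis. If the two methodologies are interchangeable, then for each patient it is arbitrary which measurement is labeled Method 1 and which is labeled Method 2. We therefore randomly shuffle each patient's pair of results and recompute the mean difference. Repeating this many times (5,000 here) builds up the distribution of mean differences we would expect to see if the null hypothesis were true.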
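Randomly swapping which of a patient's two results is labeled Method 1 is the same as flipping the sign of that patient's difference with probability 1/2. The following is a minimal vectorized sketch of a single null draw from that point of view. The helper `one_null_draw` and the toy differences are hypothetical. In distribution, it matches the per-patient `sample()` loop in the code that follows, without the nested loop.

```r
# Hypothetical helper: one draw from the sign-flip (paired permutation) null
# distribution of the mean difference. `d` holds Method 1 - Method 2 per patient.
one_null_draw = function(d) {
  d = d[!is.na(d)]  # drop patients missing one of the measurements
  # Swapping a patient's two labels flips the sign of their difference
  signs = sample(c(-1, 1), length(d), replace = TRUE)
  mean(signs * d)
}

# Hypothetical toy differences; the NA mimics an incomplete pair
set.seed(1234)
toy_d = c(-0.4, 0.1, -0.3, NA, -0.2, 0.05)
draws = replicate(5000, one_null_draw(toy_d))
summary(draws)
```

The two-sided test compares absolute values, and this null distribution is symmetric around 0. So the sign convention (Method 1 minus Method 2, or the reverse) does not affect the result.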

```r
# Two-column matrix: one row per patient, Method 1 then Method 2
pairs = cbind(paired_data$`Method 1`, paired_data$`Method 2`)

set.seed(1234)
num_permutations = 5000
permutations_diffs = numeric(num_permutations)

for (i in 1:num_permutations) {
  temp = numeric(nrow(pairs))
  for (j in 1:nrow(pairs)) {
    # Randomly reorder this patient's two results and take their difference
    temp[j] = diff(sample(pairs[j, ]))
  }
  # Mean difference for this permutation, skipping incomplete pairs
  permutations_diffs[i] = mean(temp[!is.na(temp)])
}
```

Finally, with this null distribution in hand, we can calculate the p-value. This is the probability, assuming the null hypothesis is true, of seeing a mean difference at least as extreme as the one we actually observed. We estimate it as the proportion of simulated mean differences that are as extreme as the observed value, or more extreme, in either direction (positive or negative, since this is a two-sided test). The p-value comes out to roughly 0.01, which is statistically significant at the conventional 0.05 significance level.
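One caveat on dividing the count by `num_permutations`: the estimate can come out as exactly 0 when no simulated value is as extreme as the observed one. A common convention for Monte Carlo permutation tests is to count the observed statistic as one of the permutations, giving `(count + 1) / (B + 1)`. The sketch below wraps the whole two-sided test, with that adjustment, in a hypothetical `sign_flip_test` function. It is not the original analysis code. At 5,000 permutations, the adjustment changes a p-value near 0.01 only slightly.

```r
# Hypothetical helper: two-sided paired permutation (sign-flip) test of a zero mean difference
sign_flip_test = function(x, y, B = 5000) {
  d = x - y
  d = d[!is.na(d)]  # complete pairs only
  observed = mean(d)
  # B draws from the null distribution of the mean difference
  null_means = replicate(B, {
    signs = sample(c(-1, 1), length(d), replace = TRUE)
    mean(signs * d)
  })
  # Null draws at least as extreme as the observed mean, in either direction
  extreme = sum(abs(null_means) >= abs(observed))
  list(observed = observed, p_value = (extreme + 1) / (B + 1))
}

# Simulated (hypothetical) data in which the second method reads exactly 1 unit higher
set.seed(2024)
x = rnorm(20, mean = 5)
y = x + 1
result = sign_flip_test(x, y)
result$p_value
```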

```r
# Two-sided p-value: share of permutations at least as extreme as the observed mean
p_value = sum(abs(permutations_diffs) >= abs(avg_difference)) / num_permutations

# Null distribution with the observed value (and its mirror image) marked in red
tibble(results = permutations_diffs) |>
  ggplot(aes(x = results)) +
  geom_histogram() +
  geom_vline(xintercept = avg_difference, color = "red") +
  geom_vline(xintercept = -avg_difference, color = "red") +
  labs(
    title = "Permutation Test Results",
    subtitle = "Results of simulating the null hypothesis that there is no difference in antibody measurements\nfor the two different testing methodologies. The red lines show where the actual observed\noutcome was found.",
    x = "Average Difference in Test Methodology"
  )
```

Conclusions

In this study, we wanted to determine whether two different methodologies for measuring antibodies produce different results. Given the small sample size and the lack of normality in the data, we used a paired permutation test. Its null hypothesis was that there is no difference in results between the two testing methodologies. The test provided strong evidence against that null hypothesis (p ≈ 0.01), supporting the alternative that the two testing methods yield different measured antibody levels.

The results of this study have since been used in ongoing research to better tune the testing parameters of the second methodology. As the two methodologies come into closer alignment, the second may begin to replace the first. Because it is cheaper to perform, this would yield significant cost savings for the client, provided it achieves the same level of accuracy.