For a “new” company, Pontem has been quite busy in 2023 with several deepwater (offshore) startups, including mega-projects and very technically challenging developments. There is something both terrifying and exhilarating about being part of a team that brings on a new well, particularly at the flowrates for some of these projects in the portfolio. It’s the point where all of the planning and analytical work comes to a head. Days, months, even years of HAZIDs, HAZOPs, PSAs, PSSRs (Pre-Startup Safety Review), and SUOPs (Start-Up On Paper) all come down to that moment where you start cracking chokes. It’s Go Time.

If you start me up, I'll never stop…Never stop, never stop, never stop

You make a grown man cry….

Before continuing, I want to go back to the first paper I ever published (SPE Conference, 2001). Coming from a background in refining / FCC units, I was new to the offshore space and the industry was generally still in its infancy with (ultra)deepwater. So, quite a bit of fundamental work was occurring in parallel with design decisions and actual operations. Also, I thought the title was clever.

The paper was designed to talk about what everyone should consider in their development strategies and how that would translate to actual operations. The only problem was - I had not operated anything! Everything was theoretical (putting that Michigan education to use) and seemed to check out. But, in a world where input data is often massaged and output data looks good “on paper”, it is easy to opine on what should be done… when you have never done it. At the conference, I remember being critiqued in the Speaker’s Room by a seasoned veteran, made worse by a condescending French accent, for a lack of “have you considered X, Y, and Z in the field?”. In retrospect, it was probably good advice (I forget what was said specifically), but the message I internalized was along the lines of “you don’t know sh*t yet, kid”. I gave the speech - it went fine - but I was still fuming when I got back from San Antonio. Walked into my apartment, dropped my bag, and punched a hole through my bedroom door. My roommate Jason and I weren’t getting our security deposit back anyways, but that pretty much sealed the deal.

The moral of the story (other than learn some impulse control…) was simple: all of the analytics, formulas, simulations, etc. are great…but until you have put them into practice, how can you be sure you have it right? Fast-forward back to 2023.

…because as we know, there are known knowns; there are things we know we know. We also know there are known unknowns; that is to say we know there are some things we do not know. But there are also unknown unknowns—the ones we don't know we don't know. - Donald Rumsfeld

Greenfield vs. Brownfield

For brand-new developments (“Greenfield”), the risks are primarily related to the unknown. How will the reservoir perform? How will the facility perform? Will the pipeline thermal behavior match the insulation specifications? How good are the predictive models? Are the meters accurate? These can be exciting because you have a blank sheet of paper, a new car to take for a test drive. However, the uncertainty surrounding the design, the performance, and the quality of the data is nerve-racking. I don’t want to break the new toy right when we take it out of the box!

On the other hand, bringing wells into existing infrastructure (“Brownfield”) has its own set of unique challenges. Often, we have a performance history of the basin, facility, pipeline, etc. Quite a few “knowns”. But, we also have constraints such as maintaining existing production (revenue), hydraulic / capacity limits, chemical injection availability, commercial specifications, etc. By fixing some of our variables, we give the illusion of control - we have minimized the “unknowns”. But this often means…to quote another Rolling Stones song…You can’t always get what you want. We don’t have all of the tools in our toolbox available. It’s often time to really put our engineering know-how to use and solve the problem that is presented to us. A delicate dance between the theoretical and the practical.

The remaining discussion will focus on three startups from this year. All are different and have unique challenges, but they share a common thread: they are technically daunting (this is Pontem’s reason for being, our raison d’être). Specifically, they all include elements of:

Brownfield (existing facilities)

Deepwater

Long-distance subsea tiebacks (limited telemetry between wellhead / platform)

High productivity wells (>$1MM/day individual well revenue)

Pushing limits of engineering model accuracy

Environmental considerations (limiting overboard water discharge, flaring, etc.)

Spread out the oil, the gasoline

I walk smooth, ride in a mean, mean machine

Start it up

Field A (Brownfield / Gas)

For an existing facility, one of the challenges is whether to complete / clean-up future (new) wells to a rig or through the existing facility. While cleaning up the well to a rig offers a more robust and ‘simple’ startup when flipped through the facility, there is a price. And, for deepwater developments, a hefty one. Rig costs in excess of $400k / day offer a daunting question: how ‘clean’ does the well need to be?

Complicating the decision for many deepwater gas developments is that facilities often employ a closed-loop chemical injection system (mono-ethylene glycol, MEG) for hydrate control. Once fluids are brought back to the facility, certain species within the completion fluids (e.g. salts), as well as free iron from the pipeline (Fe²⁺), can precipitate and accumulate within the MEG system. This can foul the topside equipment, and the contaminants can be recirculated back through the subsea system, fouling the tree / flowline before returning to the facility again.

Other than the cleanliness of the well, a big advantage of flowback to the rig is the collection of fluid samples and other well-specific data, prior to bringing the well on-line into the existing facility. Gathering samples/data as part of a commingled production stream (in the absence of any test separator) complicates the telemetry process on the new well. But, as is always the case with data: what is the value of the data collected, and what price are you willing to pay to collect it? And, if we are waiting for the well to clean up to the facility over time before we get accurate fluid samples, we are often trying to troubleshoot the airline while we are flying it!

In this particular case, the decision was made NOT to clean up to the rig, but instead flow it directly to the facility. This would be the first well brought into the existing facility in this manner (all prior wells had been cleaned up via rig). This meant processing all of the fluids - well/reservoir + completion fluids - through the existing process trains that were already producing and selling gas.

One additional challenge is the selection of the completion fluids. The brine weight, expressed as density in “ppg” (pounds per gallon), is largely selected to provide enough hydrostatic pressure for overbalance well control. However, because these fluids are made up largely of water, the risk for deepwater operations, at operating pressures and ambient seafloor temperatures (~40°F), is hydrate formation if the completion fluid does not carry enough salt (salinity) to inhibit it.
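The overbalance side of that trade-off is simple hydrostatics. A minimal sketch in Python, using the standard oilfield conversion (psi = 0.052 × ppg × ft of true vertical depth) and assuming a single-density brine column; the function names and the well values in the usage lines are hypothetical, not from this project:

```python
# Hydrostatic sizing of a completion brine, in oilfield units.
# Assumption: a single-density brine column; the well values used below are hypothetical.

PSI_PER_PPG_FT = 0.052  # standard oilfield conversion: psi per (lb/gal x ft)


def hydrostatic_psi(brine_ppg: float, tvd_ft: float) -> float:
    """Pressure (psi) exerted by a brine column of the given weight and true vertical depth."""
    return PSI_PER_PPG_FT * brine_ppg * tvd_ft


def required_brine_ppg(reservoir_psi: float, tvd_ft: float, overbalance_psi: float) -> float:
    """Lightest brine weight (ppg) that holds the target overbalance over reservoir pressure."""
    return (reservoir_psi + overbalance_psi) / (PSI_PER_PPG_FT * tvd_ft)


if __name__ == "__main__":
    # Hypothetical well: 20,000 ft TVD, 13,000 psi reservoir, 300 psi target overbalance.
    ppg = required_brine_ppg(13_000, 20_000, 300)
    print(f"Minimum brine weight: {ppg:.2f} ppg")
    print(f"Hydrostatic at that weight: {hydrostatic_psi(ppg, 20_000):,.0f} psi")
```

The weight this returns is only half the answer: the salt chemistry chosen to reach that weight is what sets the hydrate suppression, which is the flow assurance concern here.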

From a flow assurance perspective, selection of the appropriate completion brine considers several factors:

Sufficient total salinity at target weight for hydrate control (shut-in tubing pressure)

Monovalent vs. divalent salts, where divalent salts (e.g. calcium-based fluids) may pose significantly higher fouling risks in MEG regeneration/reclamation units due to how they precipitate in the pre-treatment units.

Single-salt vs. dual-salt, which may be required to achieve the necessary hydrate suppression / weight combination, but may present more QA/QC challenges to ensure the final mixture gives adequate hydrate suppression.

Impact of methanol (MeOH) injection during startup on salt precipitation from the completion brines, which can occur with high MeOH concentrations in high salinity brines.

Predictive tools (e.g. PVTSim, or Multiflash, which was used in this case) offer reasonable predictions of hydrate suppression for a given brine composition. These can also be vetted with laboratory testing to confirm model accuracy, since model predictions tend to deviate further from measured values as the total salinity increases.
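The screening logic behind the first bullet above can be written as a simple subcooling-margin check. This is a sketch under stated assumptions: the uninhibited hydrate temperature at shut-in tubing pressure and the brine’s suppression would come from a thermodynamic tool such as Multiflash or PVTSim (here they are plain inputs), while the `model_uncertainty_f` derating for high-salinity brines and the example numbers are hypothetical:

```python
def hydrate_margin_f(
    hydrate_temp_at_sitp_f: float,
    brine_suppression_f: float,
    seabed_temp_f: float = 40.0,
    model_uncertainty_f: float = 0.0,
) -> float:
    """Degrees F between seabed temperature and the inhibited hydrate temperature.

    Positive: the shut-in brine column sits outside the hydrate region.
    model_uncertainty_f (hypothetical) reduces the credited suppression, reflecting
    the larger model-vs-lab deviation expected at high total salinity.
    """
    credited_suppression = brine_suppression_f - model_uncertainty_f
    inhibited_hydrate_temp = hydrate_temp_at_sitp_f - credited_suppression
    return seabed_temp_f - inhibited_hydrate_temp


if __name__ == "__main__":
    # Hypothetical inputs: 75 F uninhibited hydrate temperature at SITP,
    # 45 F suppression from the brine, 3 F derating for model uncertainty.
    margin = hydrate_margin_f(75.0, 45.0, seabed_temp_f=40.0, model_uncertainty_f=3.0)
    print(f"Hydrate margin: {margin:.1f} F")
```

A negative result means the candidate brine needs more total salinity (or a different salt system) at the target weight.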

Furthermore, the completion fluid volumes ultimately produced back to the facility are often “TBD”, so liquid handling equipment must be robust to various outcomes, ranging from “no liquids” to “tsunami of liquids”. Additional early telemetry can be gained through multiphase flow modeling to predict liquid arrival rates, as well as the pressure signature expected during the startup (e.g. pressure increases as liquids move up any slopes/escarpments).

A robust ‘start-up on paper’ (SUOP) exercise, designed to predict ranges of completion volumes and arrival rates, may offer a “cone of uncertainty” or “swim lane” to show operations what may be coming. In this way, real-time data (pressure / temperature, gas chromatograph, liquid rates, etc.) can be compared against the theoretical outcomes to provide early warning signs if something is out of the ordinary. For a short, well-instrumented pipeline system, this may not be necessary… but for a 100+ km system with only a single inlet/outlet measurement, having some awareness of what is happening inside the pipeline is crucial. The point is not to rely solely on a model prediction, but to use it as a ‘second opinion’ to assist operations.
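One way to picture the “swim lane” in practice: the SUOP supplies a low/high envelope versus time for a measured signal, and each real-time reading is classified against it. A minimal sketch; the envelope values, the time base, and the choice of outlet pressure as the signal are hypothetical placeholders for whatever the startup models actually produce:

```python
from bisect import bisect_right

# Hypothetical SUOP envelope for outlet pressure (psi) versus hours since startup.
TIMES_HR = [0.0, 6.0, 12.0, 24.0]
LOW_PSI = [1000.0, 1020.0, 1060.0, 1100.0]
HIGH_PSI = [1010.0, 1050.0, 1120.0, 1180.0]


def interp(xs, ys, x):
    """Linear interpolation of ys at x, clamped to the first and last points."""
    if x <= xs[0]:
        return ys[0]
    if x >= xs[-1]:
        return ys[-1]
    i = bisect_right(xs, x)
    x0, x1 = xs[i - 1], xs[i]
    y0, y1 = ys[i - 1], ys[i]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


def classify(t_hr, measured, times=TIMES_HR, low=LOW_PSI, high=HIGH_PSI):
    """Place a real-time reading relative to the predicted swim lane at time t_hr."""
    if measured < interp(times, low, t_hr):
        return "below envelope"
    if measured > interp(times, high, t_hr):
        return "above envelope"
    return "within envelope"


if __name__ == "__main__":
    for t, p in [(3.0, 1005.0), (9.0, 1030.0), (18.0, 1150.0)]:
        print(f"t = {t:4.1f} h, P = {p:.0f} psi: {classify(t, p)}")
```

A reading outside the lane is not automatically a problem; it is a prompt to ask whether the model or the pipeline is behaving differently than expected.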

Outcome: Ultimately, the decision to flow the well back to the facility was successful and production was maintained whilst accommodating the new well. Model predictions were in-line with actual field performance. The integrated approach to handling an “unknown” production scenario from the new well (without the downtime and cost associated with a flowback to a deepwater rig) enabled a quicker and lower-cost (life-cycle) startup, setting a possible roadmap for future wells.

Ride like the wind at double speed

I'll take you places that you've never, never seen

Start it up

Field B (Brownfield / Gas)

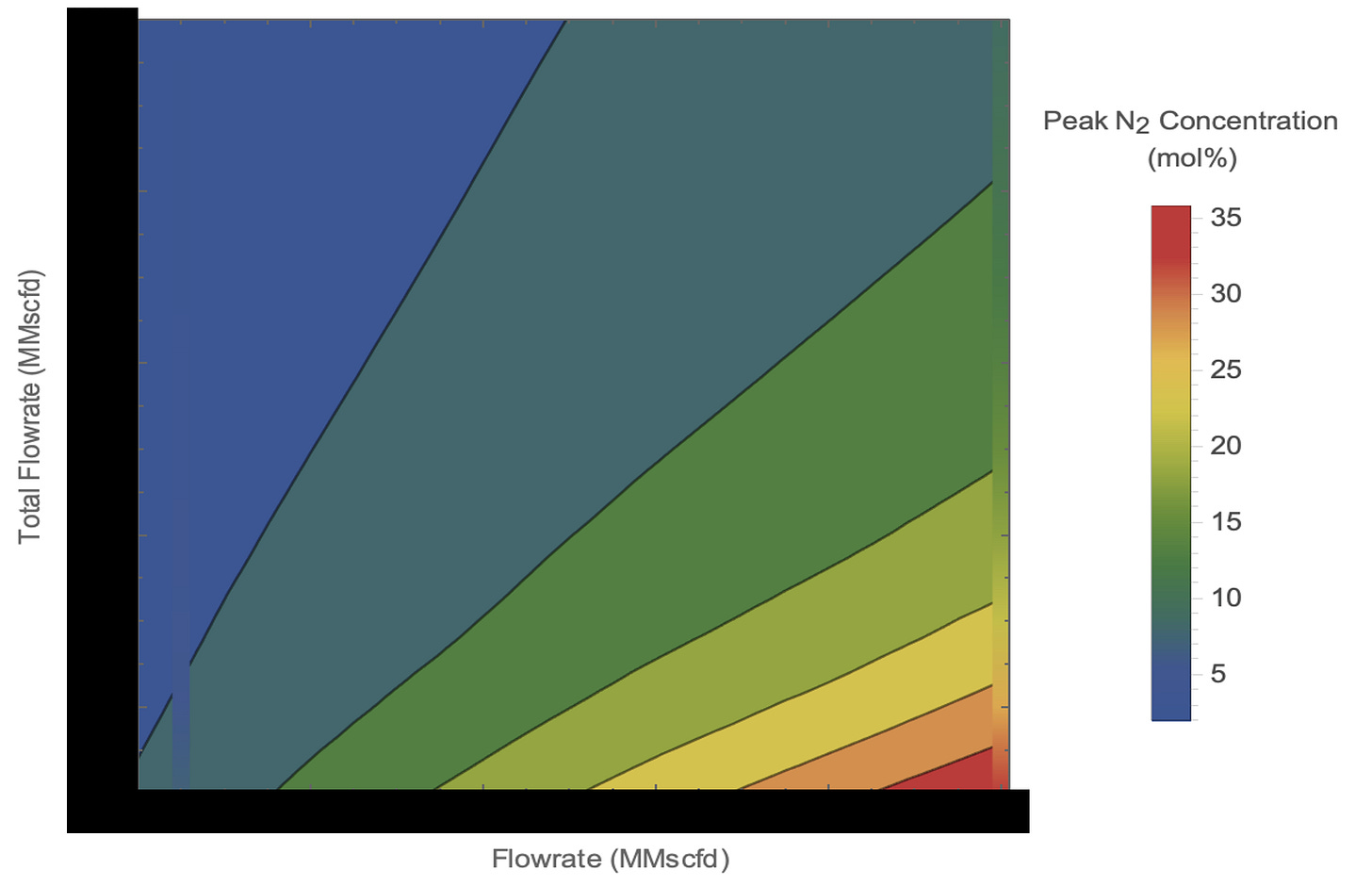

The second startup was slightly more challenging than the first one for a few reasons. Aside from the longer distance, it entailed commissioning a new pipeline segment that remained filled with nitrogen (N2) from the initial dewatering, which would then flow into an existing, operational pipeline that we can assume is filled with methane (CH4). The potential need to flare off-spec gas (high N2 content) would severely limit operations and carry environmental (and social) concerns, as well as commercial implications. Completing a non-flaring, on-spec startup by controlling the N2 content was therefore of utmost importance.

Transient multiphase models have been around for 25+ years to model gas/liquid behavior in pipelines, accounting for slippage and partitioning between the bulk phases. But gas/gas dispersion (N2/CH4) is less well understood and less technically validated. Theoretically, N2 should move much like a plug, with some small dispersion on either side of any N2-filled pipeline segment. This would imply near piston-like displacement and a fairly predictable gas composition at the receiving end of the pipeline.

Model predictions (using OLGA) show a similar trend to the theoretical expectation, but are affected by how the pipeline is discretized. This is not unexpected, as any numerical simulation is subject to grid/mesh fidelity to some extent, since the calculations are done within the assigned volume boundaries. But ultimately, the modeling approach must translate to the field concern: what is the actual dispersion that will occur in the field? If there is a significant ‘smearing’ effect and high residual N2 concentrations, the risk of producing off-spec gas (requiring flaring) could be substantial.

While it would be great to discretize the pipeline into very small sections, the numerical instability and increased computation time make this impractical. So, a compromise cell length of 100 m was selected.
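The discretization effect is easy to reproduce outside a commercial simulator. The sketch below advects an N2 slug through a CH4-filled line with a generic first-order upwind scheme. This is an assumption: it does not reproduce OLGA’s internal numerics, and it ignores physical gas/gas dispersion, so any smearing comes purely from the grid. Coarser cells spread the fronts further and erode the peak N2 fraction. All dimensions are hypothetical:

```python
def advect_slug(n_cells, length_m, velocity_m_s, duration_s,
                slug_start_m, slug_end_m, cfl=0.5):
    """First-order upwind advection of an N2 mole-fraction profile.

    Returns cell-centre positions and the N2 fraction after duration_s.
    Gas entering at the inlet is pure CH4 (N2 fraction 0).
    """
    dx = length_m / n_cells
    dt = cfl * dx / velocity_m_s          # time step set by the chosen Courant number
    steps = round(duration_s / dt)
    x = [(i + 0.5) * dx for i in range(n_cells)]
    c = [1.0 if slug_start_m <= xi < slug_end_m else 0.0 for xi in x]
    for _ in range(steps):
        upstream = [0.0] + c[:-1]         # inlet boundary: pure CH4
        c = [ci - cfl * (ci - ui) for ci, ui in zip(c, upstream)]
    return x, c


def transition_length_m(x, c, lo=0.1, hi=0.9):
    """Pipe length where the N2 fraction is 'smeared' between lo and hi (both fronts)."""
    dx = x[1] - x[0]
    return dx * sum(1 for ci in c if lo < ci < hi)


if __name__ == "__main__":
    # Hypothetical case: 20 km line, 2 km N2 slug, 5 m/s gas velocity, ~33 minutes of flow.
    for n in (400, 20):  # 50 m cells vs 1 km cells
        x, c = advect_slug(n, 20_000, 5.0, 2_000, 1_000, 3_000)
        print(f"dx = {20_000 / n:6.0f} m  peak N2 = {max(c):.2f}  "
              f"smeared length = {transition_length_m(x, c) / 1000:.1f} km")
```

For this scheme the numerical diffusion coefficient is roughly u·Δx·(1 − CFL)/2, so the artificial spreading grows with cell size; finer cells buy sharper fronts at the price of more cells and smaller time steps.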

The specific challenge then progressed from the discretization (model) to how to control the incoming N2 rate (actual). Contractual gas specifications limit the maximum N2 content to a much smaller number than shown above, far below the >10% that was possible at the planned startup rates. A novel approach was taken to run a wide range of startup scenarios for the new well + existing production, with the goal of finding a combination that allowed the combined sales gas to meet the contractual specification. An interactive tool was provided to the operator to allow them to evaluate the various combinations and fit them within the overall well startup and surveillance plan. This was crucial, as the N2 “packet” was expected to move down the pipeline intact. In other words, once that packet was launched from the well, there was no turning back.
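The core of that scenario screening is a mixing balance at the tie-in. A simplified sketch, assuming instantaneous, complete mixing of standard-volume flows where the new line joins the existing production; the 3 mol% specification, the N2 fractions, and the rates are hypothetical, not the contractual values:

```python
def blended_n2(q_new, y_new, q_existing, y_existing):
    """N2 mole fraction of the commingled sales gas (rates in MMscfd, fractions 0-1)."""
    return (q_new * y_new + q_existing * y_existing) / (q_new + q_existing)


def max_new_line_rate(spec, y_new, q_existing, y_existing):
    """Largest new-line rate that keeps the blend at or below the N2 spec."""
    if y_new <= spec:
        return float("inf")              # the new gas is already on-spec
    if y_existing >= spec:
        return 0.0                       # no headroom left in the existing stream
    return q_existing * (spec - y_existing) / (y_new - spec)


if __name__ == "__main__":
    # Hypothetical: 300 MMscfd existing gas at 0.5 mol% N2, N2 packet arriving at 100 %.
    q_max = max_new_line_rate(spec=0.03, y_new=1.0, q_existing=300.0, y_existing=0.005)
    print(f"Max new-line rate while the N2 packet arrives: {q_max:.1f} MMscfd")
    print(f"Blend at that rate: {blended_n2(q_max, 1.0, 300.0, 0.005):.3%}")
```

A screening tool would repeat this across candidate rates and packet arrival times; holding existing production higher buys more room for the new line while the packet passes.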

On the surface, this appears fairly simple (again, if you believe the models). However, given this is a new well being brought into an existing facility, a number of things could go wrong and interrupt a “smooth” startup. Recalling the completion fluid discussion from Field A, the unknown volume of completion fluids (not to mention residual hydrotest water following the N2 dewatering purge) adds another variable, liquids, to the gas/gas dispersion modeling. How would the additional phases impact the gas dispersion behavior?

Additionally, surveillance of the new well dictates that not only is the well brought up slowly, but there are also several opportunities to gain insight into the reservoir behavior through Pressure-Transient Analysis (PTA), which requires hold / shut-in periods, making a ‘simple’ calculation quite dynamic over the duration of the actual startup. If the pipeline is shut in while N2 remains in the system, what happens to it? Does it stand still, waiting patiently at attention without mixing, before it resumes its orderly march back to the facility?

Outcome: So, how did it all turn out….pretty much as predicted! The N2/CH4 dispersion was in-line with model (OLGA) predictions and the behavior of the nitrogen within the pipeline was nearly a “packet-like” train car migrating down the pipeline. Of course, sometimes it is better to be lucky than good, as the startup proceeded without interruption during the early phase N2 purge, so we never stress-tested the potential for N2 dissipation within a static/shut-in pipeline. The well was brought on-line and the N2 behavior was accurately predicted, allowing production to be blended/commingled into the facility without any off-spec gas or need for flare.

The confirmation of the modeling accuracy and ability to predict future behavior (for subsequent wells) may enable an even more aggressive (i.e. shorter ramp-up) approach, which directly results in further cost savings. Confidence that the modeling represents field behavior means fewer potential curtailments/deferrals on flowrate, directly increasing gas sales. Not to mention the added benefit that flaring would not be necessary.

As an aside with respect to flaring…sizing a flare unit for natural gas (CH4) is not the same as sizing it for nitrogen (N2). For the same mass rate sizing basis:

Methane (MW 16.04): 0.2217 kg/s per MMscfd, so 100 MMscfd of CH4 is roughly 22.2 kg/s

Nitrogen (MW 28.01): 0.3873 kg/s per MMscfd, so the same mass rate is only about 57 MMscfd of N2

Be aware of the limits when controlling to a gas flowrate (volumetric), particularly when setting well target production rates if putting N2 through the flare system during commissioning.
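The conversion behind those numbers is a molecular-weight ratio. A minimal sketch; it assumes ideal-gas standard volumes of 379.49 scf/lbmol (60 °F, 14.696 psia), so the per-MMscfd mass rates come out within about 0.1% of the figures above, which may have used slightly different standard conditions:

```python
SCF_PER_LBMOL = 379.49   # assumed ideal-gas molar volume at 60 F and 14.696 psia
KG_PER_LB = 0.45359237
SECONDS_PER_DAY = 86_400

MW_CH4 = 16.04
MW_N2 = 28.01


def kg_per_s_per_mmscfd(mw: float) -> float:
    """Mass rate (kg/s) carried by 1 MMscfd of a gas with molecular weight mw."""
    lbmol_per_day = 1.0e6 / SCF_PER_LBMOL
    return lbmol_per_day * mw * KG_PER_LB / SECONDS_PER_DAY


def same_mass_mmscfd(rate_mmscfd: float, mw_from: float, mw_to: float) -> float:
    """Volumetric rate of gas 'to' that carries the same mass as rate_mmscfd of gas 'from'."""
    return rate_mmscfd * mw_from / mw_to


if __name__ == "__main__":
    design_mass = 100.0 * kg_per_s_per_mmscfd(MW_CH4)
    print(f"100 MMscfd CH4 = {design_mass:.2f} kg/s")
    print(f"Same mass as N2 = {same_mass_mmscfd(100.0, MW_CH4, MW_N2):.1f} MMscfd")
```

Mass rate is only one input to flare sizing; heat release and radiation differ as well, since nitrogen does not burn.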

I've been running hot

You got me wrecking gonna blow my top

If you start me up

Field C (Brownfield / Oil)

We are typically involved in early-stage project engagement, at the field development level that sets the course of the project through its production lifecycle. How is the system configured and what is the risk tolerance? What are the key, underlying assumptions that enable a field development to take shape? One of the common challenges we run into is project continuity, not only in personnel, but also in decision-making basis. Do the early field decisions follow through to the operations and startup philosophy? We can all recall someone saying “Who made this dumb design decision?” as we get into the operational phase.

In this specific project, we had a marginal (economic) field that was a long-distance tieback to an existing facility. Complicating the development was a challenging reservoir that was pushing the limits of HP/HT (High-Pressure / High-Temperature) for deepwater. Conventional development architecture would have called for a dual subsea flowline configuration, allowing for segregation of fluids, as well as operating an HP/LP line as the reservoir(s) decline. However, due to the challenging project economics, there was a significant cost-savings opportunity to take a more ‘risk-based approach’ and consider a single, commingled flowline. This translated directly into large CAPEX savings ($300MM+) by eliminating the second heavy-wall, long-distance pipeline.

However…this is often pocketed at the project phase and the direct impact on the operations team can be overlooked, since the real impact of that decision does not occur until years later. This may result in increased downtime due to the single flowline, increased chemical (OPEX) costs to provide some extra operating margin, or more complexity when the field expands / adds new wells….as in, future startups can be a real pain!

Enthalpy Lesson: Joule-Thomson Effect (“JT Cooling”)

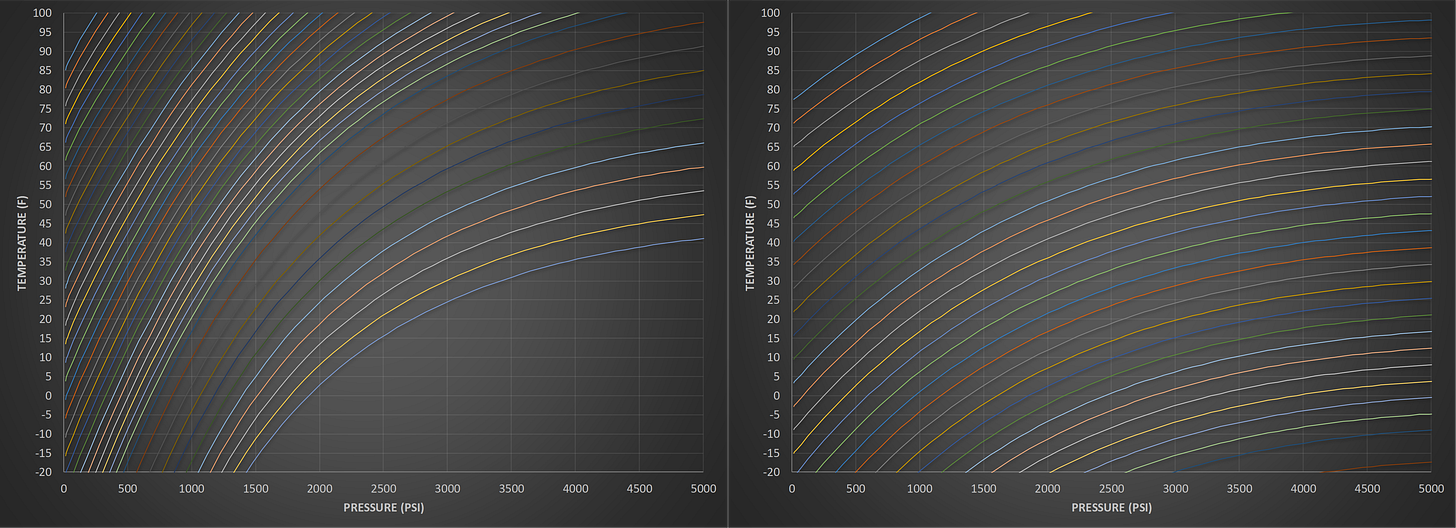

If you have worked in oil/gas for long enough, you have undoubtedly heard the term “JT Cooling”. Short for the Joule-Thomson effect, it describes how the isenthalpic expansion of gas will often result in cooling, more pronounced at lower pressures. Outside of oil/gas, and particularly relevant this summer, the best-known application of the Joule-Thomson effect is in the refrigeration industry. JT Cooling is pressure-specific, as well as fluid-specific. The magnitude of any temperature change (ΔT) can fairly easily be determined from the shape of the enthalpy curves. As an example, consider two fluids, methane and nitrogen, each expanded from 500 psi and 70°F:

Methane: 500 psi (70°F) → 100 psi: 42°F

Nitrogen: 500 psi (70°F) → 100 psi: 60°F

The steeper the slope, the larger the temperature drop we expect. This is why, in the previous example (Field B), once N2 was purged at the facility and we started to receive CH4, we actually saw a corresponding drop in temperature. The same operating conditions (pressure drop) when producing N2 yielded a smaller temperature response. So, while waiting for the gas chromatographs to register a change in molecular weight to indicate the arrival of CH4, which typically takes a 5-7 minute cycle, we knew we had already transitioned once we saw the temperature drop due to the JT effect. High school chemistry, for the win!
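The two example expansions can be reduced to average Joule-Thomson coefficients (°F per psi over the 500 → 100 psi interval), which also gives a feel for the temperature step that flags CH4 arrival before the chromatograph updates. A rough sketch: it treats each coefficient as constant over the interval, and the detection rule, its threshold, and the temperature series are hypothetical:

```python
def avg_jt_coeff(p_in_psi, t_in_f, p_out_psi, t_out_f):
    """Average Joule-Thomson coefficient (deg F per psi) over an isenthalpic expansion."""
    return (t_in_f - t_out_f) / (p_in_psi - p_out_psi)


MU_CH4 = avg_jt_coeff(500, 70, 100, 42)   # methane example from the text
MU_N2 = avg_jt_coeff(500, 70, 100, 60)    # nitrogen example from the text


def first_arrival_index(temps_f, baseline_samples=5, drop_threshold_f=5.0):
    """Index of the first reading that falls drop_threshold_f below the early baseline.

    Hypothetical surveillance rule: at a constant pressure cut, a JT temperature
    drop of this size suggests CH4 has replaced N2 at the choke.
    """
    baseline = sum(temps_f[:baseline_samples]) / baseline_samples
    for i, t in enumerate(temps_f[baseline_samples:], start=baseline_samples):
        if t < baseline - drop_threshold_f:
            return i
    return None


if __name__ == "__main__":
    dp = 400.0
    step = (MU_CH4 - MU_N2) * dp
    print(f"mu_JT CH4 = {MU_CH4:.3f} F/psi, N2 = {MU_N2:.3f} F/psi")
    print(f"Expected temperature step at a {dp:.0f} psi cut: {step:.0f} F")
    # Hypothetical one-minute readings downstream of the choke
    readings = [60, 60, 61, 60, 60, 59, 57, 52, 47, 43, 42, 42]
    print("CH4 arrival flagged at sample", first_arrival_index(readings))
```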

Interestingly, from the pictures above, you can see that as the operating pressures go up, the enthalpy slope gets flatter… and, if we keep increasing the pressure, is it possible that the slope changes? If so, what should we expect to happen?

Reverse Joule-Thomson Effect (“JT Heating”)

For higher pressure systems, the slope of the enthalpy curves inverts. So, when we take a pressure DROP, we expect to see a temperature INCREASE. In the case of this field (Field C), we have an HP/HT well, so any pressure cut above ~4000 psi will result in a temperature rise.

For HP/HT systems, this inversion is expected to apply throughout the wellbore, both across the sandface (drawdown) and at any other pressure drop location (e.g. the wellhead choke). At the sandface, however, the localized temperature increase competes with the thermal equilibrium of the reservoir. So, a small element of fluid may be heated by the Reverse JT Effect… but the fluids are also being “cooled” by the surrounding reservoir. Thus, the impact on fluid temperature at the downhole temperature gauges may not be noticeable. Further downstream (e.g. at the wellhead), however, a similar pressure drop will also yield a corresponding temperature increase, but this effect is more localized, is not dissipated, and does not have the ‘large reservoir of reservoir fluid’ (pun intended) to absorb it. So, this is expected to translate to a noticeable temperature increase in the fluid.

In a brownfield development, we are often looking to control flowrate to (a) maintain production from existing wells and (b) stay within facility limits. When operating with a single flowline only, both of these may translate to taking a large pressure drop at the subsea choke…translating to the potential for localized JT Heating. Field surveillance is critical to ensure that any ‘abnormal’ behavior in pressure/temperature can be adequately explained (i.e. is this just physics/science or is something wrong?)

Why are any of the temperature issues relevant? Well, as much as we typically like to preserve heat in subsea systems, there are some negative consequences for increased temperatures:

Large pressure drops can result in fluid temperatures above reservoir temperature. When designing equipment specifications, it is common to rate equipment to a maximum service temperature equal to the reservoir temperature. In this case, that may not be high enough. Have we rated the system for the correct maximum temperatures?

Similarly, subsea insulation (e.g. GSPU) may have upper temperature limits that could be exceeded if we have a significant “JT-Heating” effect at the flowline inlet.

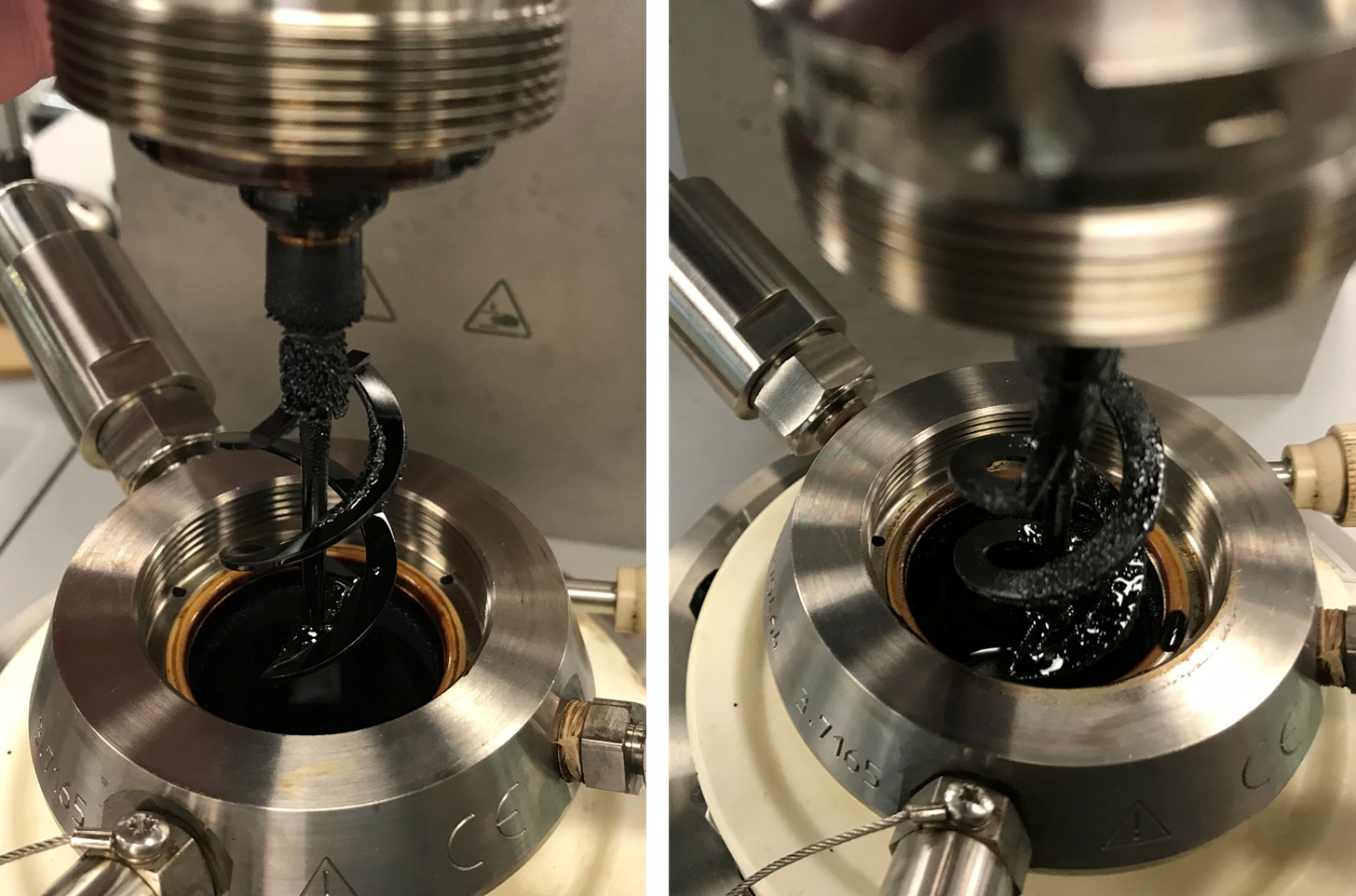

Corrosion rates are more severe and asphaltene deposition may be worse at higher temperatures (see image above), resulting in a harder deposit that may be more difficult to remove and pose a risk of wellhead choke damage.
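The first two concerns can be screened with a simple check at the choke: apply an average JT coefficient across the pressure cut and compare the outlet temperature with equipment and insulation limits. A sketch only; a single negative coefficient over a large HP/HT pressure cut is a crude assumption (in practice the outlet temperature would come from an isenthalpic flash on the actual fluid), and every number, including the limits, is hypothetical:

```python
def choke_outlet_temp_f(t_in_f, p_in_psi, p_out_psi, mu_jt_f_per_psi):
    """Outlet temperature from dT = mu_JT * dP across an isenthalpic choke.

    mu_JT < 0 (above the inversion point) turns a pressure drop into a temperature rise.
    """
    return t_in_f + mu_jt_f_per_psi * (p_out_psi - p_in_psi)


def exceeded_limits(t_out_f, limits_f):
    """Names of the temperature limits that the choke outlet temperature exceeds."""
    return [name for name, limit in limits_f.items() if t_out_f > limit]


if __name__ == "__main__":
    # Hypothetical HP/HT case: 250 F fluid, 12,000 -> 9,000 psi cut, mu_JT = -0.005 F/psi
    t_out = choke_outlet_temp_f(250.0, 12_000.0, 9_000.0, -0.005)
    limits = {"equipment rated to reservoir T": 250.0, "flowline insulation": 260.0}
    print(f"Choke outlet: {t_out:.0f} F; exceeded: {exceeded_limits(t_out, limits)}")
```

With a positive coefficient the same function reproduces ordinary JT cooling, so one check covers both sides of the inversion.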

Fluid Properties (Asphaltenes): ROCK-ing the Choke

Introducing production from a new (adjacent) well doesn’t sound too difficult. In theory, once the infrastructure is fully commissioned and chemicals are available, the well is ready for start-up. In this case, there was a risk to manage, as the PVT fluid analysis report was not available. All we had were samples from appraisal drilling done years prior, contaminated with oil-based mud (OBM). While not knowing what was going to come up the well seems very risky, we knew the new well would produce from a zone similar to the existing production field, so its saturation pressure, specific gravity, and Asphaltene Onset Pressure (AOP, the primary flow assurance risk) should be relatively similar. Production history told us the propensity for asphaltene precipitation (and risk of deposition) would need to be managed by continuous injection of asphaltene inhibitor (AI), which averaged in the range of $10,000 / day in chemical costs. Qualification tests using the incumbent chemical indicated good performance on the expected new fluids, which would allow the new well to operate with the same chemical as the rest of the field.

Basic nodal analysis had shown that target field rates could be met by active pressure management, while maintaining the current production rates from the old well. A second advantage of an active pressure management plan was that it kept the subsea system pressures above the bubble point, thereby minimizing the risk of hydrate formation. Maintaining current rates, adding new production, and not introducing another flow assurance risk?! Win. Win. Win!

The initial results looked impressive, and the team thought this was a great achievement. As expected, the well continued to unload the completion fluids along with new production. Operations performed a pressure build-up (PBU) prior to resuming production to better understand the reservoir characteristics. Then it happened. Everything runs great…until it doesn’t. Production rates suddenly began to decline and wellbore pressures began to rise. “Something” was obstructing the production in the wellbore. Could this be asphaltene deposits building? A bit difficult to know, when you are 20+ miles away and 3 miles below mudline.

Several checks were run to eliminate possible deposition scenarios: the temperature profile (high, eliminating possible wax deposition. Check); no water breakout (apart from the completion brine) and no gas breakout (eliminating possible hydrate formation. Check). The early hypothesis was that it had to be asphaltenes. A slightly unconventional decision was made to step open the choke and increase the AI dosage rate in an effort to dislodge the deposit. It worked! Until it happened again 4 days later, and then again approximately 4 days after that, and again and again.

Each time the choke was “rocked”, the tubing pressures fell and an increase in production was seen. The pressure build-up and mechanical remediation supported a deposit theory. Could the effective dose rate of the AI be higher for the new fluids? It appeared early assumptions of “similar” fluids based on production zones were incorrect, and the new well fluids were just unique enough that the AI performed worse in the new fluids than in the existing production fluids. Unfortunately, clean samples could not be collected from the new well as it was still unloading the completion brine (more on that below), as well as producing into our single flowline (remember that decision?). Operations continued with high AI dosage rates until the well unloaded the majority of the completion brine.
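The signature that kept recurring (falling rate with rising wellbore pressure, relieved each time the choke was rocked) lends itself to a simple trend screen. A hypothetical sketch: least-squares slopes over a trailing window, with made-up thresholds and hourly samples, flagging when both trends point toward a restriction:

```python
def ls_slope(ys):
    """Least-squares slope of ys against sample index (units per sample)."""
    n = len(ys)
    x_mean = (n - 1) / 2
    y_mean = sum(ys) / n
    num = sum((i - x_mean) * (y - y_mean) for i, y in enumerate(ys))
    den = sum((i - x_mean) ** 2 for i in range(n))
    return num / den


def restriction_suspected(rates, pressures, window=24,
                          min_rate_drop=1.0, min_pressure_rise=1.0):
    """True when, over the last `window` samples, rate falls and wellbore pressure
    rises faster than the given (hypothetical) thresholds per sample."""
    if len(rates) < window or len(pressures) < window:
        return False
    return (ls_slope(rates[-window:]) < -min_rate_drop
            and ls_slope(pressures[-window:]) > min_pressure_rise)


if __name__ == "__main__":
    # Hypothetical hourly data: rate losing 20 bbl/d per hour, pressure gaining 5 psi per hour
    rates = [10_000 - 20 * h for h in range(24)]
    pressures = [8_000 + 5 * h for h in range(24)]
    print("Restriction suspected:", restriction_suspected(rates, pressures))
```

A flag like this only says the trends are consistent with a deposit; the elimination checks described above (temperature, water, gas) are still what narrow it down to asphaltenes.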

With fresh, clean production fluids in hand, rigorous testing of the AI began. Several weeks into producing the new well, robust dose-response curves for the AI confirmed early fears: the minimum effective dose rate of the AI was too high for acceptable production OPEX. The AI was not performing as expected compared to the first well, which also suggests the two crudes are fundamentally different at the molecular level from an asphaltene stability point of view. The path forward is to qualify an asphaltene inhibitor in laboratory deposition tests using actual fluids from each well (as opposed to analog fluids) and to establish an effective dose rate that meets the field’s production requirements.
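Reading a minimum effective dose off a dose-response curve, and translating it into daily chemical cost, can be sketched as below. Everything here is assumed for illustration: the dose-response points, the 90% performance target, the liquid rate, and the chemical price; dose is treated as ppm by volume of produced liquid:

```python
GAL_PER_BBL = 42.0


def min_effective_dose_ppm(curve, target_pct):
    """Smallest dose (ppm) reaching target_pct performance, by linear interpolation.

    curve: (dose_ppm, performance_pct) pairs sorted by dose; assumed monotonic.
    Returns None if the target is never reached within the tested doses.
    """
    if curve and curve[0][1] >= target_pct:
        return curve[0][0]
    for (d0, p0), (d1, p1) in zip(curve, curve[1:]):
        if p1 >= target_pct:
            return d0 + (target_pct - p0) * (d1 - d0) / (p1 - p0)
    return None


def daily_chemical_cost(dose_ppm, liquid_bbl_per_day, usd_per_gal):
    """Chemical cost per day for a volumetric dose in ppm of the treated liquid."""
    chem_gal_per_day = dose_ppm * 1e-6 * liquid_bbl_per_day * GAL_PER_BBL
    return chem_gal_per_day * usd_per_gal


if __name__ == "__main__":
    # Hypothetical lab dose-response curve: (ppm, % deposition reduction)
    curve = [(0, 0.0), (100, 40.0), (200, 75.0), (400, 95.0)]
    dose = min_effective_dose_ppm(curve, target_pct=90.0)
    cost = daily_chemical_cost(dose, liquid_bbl_per_day=20_000, usd_per_gal=30.0)
    print(f"Minimum effective dose: {dose:.0f} ppm, about ${cost:,.0f}/day")
```

Comparing that figure against an OPEX budget is the kind of test that turns a dose-response curve into a go/no-go on a chemical.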

Completion Fluid Effects (HP/HT)

Returning to completion fluids, selection is much more onerous for high-pressure wells, leading to heavier brines that are often zinc-based (ZnBr₂). The challenges are largely ones of compatibility with other fluids (and production chemicals) that will be injected as part of the initial well start-up process, including umbilical storage/preservation fluids. For short tiebacks (or ones with multiple flowlines), there may be ways to handle the potential incompatibilities. However, for long-distance, single flowlines, the risk tolerance is much lower. Early integration between the Drilling & Completion (D&C), chemical, and operations teams is essential to plan for the expected fluid mix that will be coming onboard the facility.

Outcome: In this instance, the new well was brought on-line at target rates. Total field production goals were achieved and the cash register is ringing (especially at today’s oil prices!!). Good cooperation between the subsurface, facilities, production, chemical and commercial teams made it possible to bring the new production into the existing flowline.

However, “close enough” in terms of fluid property assumptions proved to not be quite “good enough”. As the fluid mix (single well vs. dual well) changes, adjustments to the monitoring/surveillance program and chemical injection program are required to account for the additional well. It is not just added production, but ‘different production’, which brings with it a new set of KPIs that may be necessary, ranging from chemicals to pressure regimes within the single flowline.

Referencing back to the original design premise of a risk-based approach for a reduced CAPEX development, the significant upfront cost savings can be used to provide the operations teams with the necessary tools to effectively monitor and produce both wells. So, putting aside some contingency at the decision stage to account for a more complex operation should be strongly considered. Instead of $300MM, let’s knock it down to $290MM and get some extra gauges and possibly new chemicals! Overall, still a very positive net gain in the economics…as long as we didn’t forget to save a little money for a rainy day.

Start it up

Let me tell you we will never stop, never stop

Start me up

Never stop, never stop

Startups are intense. Startups are nerve-racking. Startups never seem to go as-planned. But, if you have never done one….you are missing out. That’s where the rubber meets the road and the learning curve accelerates. Aside from gaining confidence in the predictive models that we commonly use to design facilities, they offer the opportunity for real-time decision making and critical thinking. And tons of learning. A huge challenge in the offshore space is the price of failure - well costs, remediation/intervention costs, and well IPR rates all contribute to the need to “get it right” the first time. No pressure….

Specifically for any engineering company (vs. a hardware or chemical supplier), it can be difficult to prove value without hard numbers. A LinkedIn colleague of mine says “Show me your case studies” when advising her clients on what their value proposition is. Startups are where you earn those case studies. And the value can be described in many ways - cost savings, cash flow increases, reduced downtime, less environmental impact, novel product development, etc. All of this comes out on the back-end of successful and safe startups. Pontem has a few new ones on the board already this year…and looking forward to a few more coming soon…